DIGITAL TRANSFORMATION LEADERSHIP PRACTICE - DIGITIZE01

Leading in a disrupted world is a new game — and it is changing the way we live and work. Continuous automation cycles and the digital disruption of business models pose major new challenges for organizations and their leaders as they seek to transform their strategies for a digital age.

Digital transformation is the integration of digital technology into all areas of a business, fundamentally changing how you operate and deliver value to customers.

It is also a cultural change that requires organizations to continually challenge the status quo, experiment, and get comfortable with failure. Digital transformation is imperative for all businesses, from the small to the enterprise. Improving customer experience has become a crucial goal of digital transformation.

1. Overview

The rise of digital technologies has accelerated business disruption in every industry, generating huge opportunities driven by innovation.

Leading digital transformation requires a good understanding of the disruptive technologies, but also the ability to manage the change and uncertainty generated by the digital revolution itself.

This is not just about implementing technology and innovation, but also making the needed changes in the organization to drive transformation through new strategic initiatives. To achieve this goal, corporations need leaders able to shape the digital future with interdisciplinary skills such as:

- Business strategy

- Organization change management

- Innovation-based opportunities

Digital leadership demands living by the enduring principles that make for success, and augmenting them with new qualities that enable speed, flexibility, risk-taking, an obsession with customers and new levels of communication inside the organization.

Before we talk about digital leadership or transformation, let’s consider what we mean by digital.

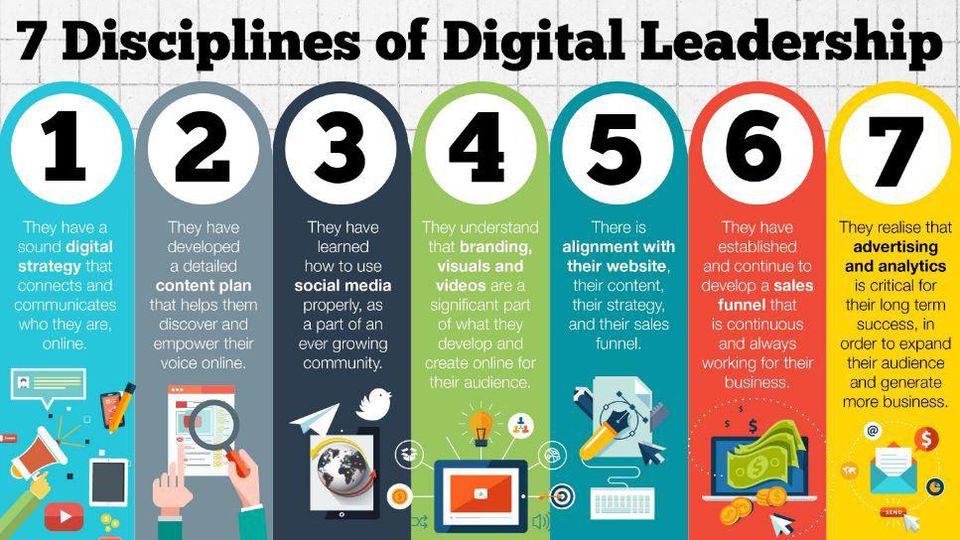

We often think about digital in terms of social media, websites, apps and digital marketing. It’s no wonder we start here. These are the platforms and tools that engage audiences and funders with what we do and who we are as artists, organisations, and leaders.

Another starting point is thinking about digital in terms of innovation, of cutting-edge technologies that can transform the art we make and how it is shared. It is hardly surprising that cultural leaders are fascinated by the potential of the latest technology to imagine new opportunities for art and heritage experiences. Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), for example, offer a toolbox rich in creative possibilities.

Digital is this, but not only this. Limiting ourselves to thinking about digital in terms of platforms or innovation alone holds back our organisations, our audiences, and our creative practice.

The new reality of digital, described so well by Julie Dodd, is the result of a scale of change “in how people choose to communicate, watch TV, learn, bank, shop and organise their lives” that has been likened to the industrial revolution.

The definition I’ve found most helpful in recognising this new reality for leaders and organisations is that offered by Tom Loosemore, who defines digital as “Applying the culture, practices, processes & technologies of the Internet-era to respond to people’s raised expectations.”

2. Skills Set for Digital Transformation Leadership

2.1 Digital Leadership and Transformation

With the changes that people are making in how they communicate and organise their lives, leaders and organisations in the cultural sector need to keep adapting to be effective in the Internet-era.

So, what does digital leadership look like? What skills and approaches are needed?

The great people at Dot Everyone, with their mission of making the UK’s leaders digitally literate, suggest that “being a leader in the digital age means understanding technology as much as you understand money, HR, or the law.” If leaders have digital understanding, they can then make “confident, informed and effective decisions for their organisation and their users”.

Skills development is a continuous process for leaders. We can all develop our digital understanding through learning about digital trends and tools, and practicing the skills that enable us to lead well. When it comes to digital, this doesn’t mean that we all need to be technologists and coders. What we do need is enough digital understanding to recognise our skills gaps and identify who we can work with so that our projects and organisations can thrive.

Skilled digital leadership is needed to transform our organisations to be fit for the Internet-era, through a process of building new capacities, structures and ways of working.

Where to start? Being curious about our users and audiences, and open to learning what can be done differently to serve them better, has huge potential to benefit cultural organisations. Agile and Design Thinking approaches to organisational and programme development informed by user needs are being explored by cultural organisations across the country. Understanding what our users need and how our work could meet these needs can make what we do more appealing, relevant, fundable, accessible, and cheaper to develop and deliver.

By learning from data and insights about our users and their needs, we can transform cultural leadership and cultural organisations to thrive in the Internet-era, and move away from limiting ourselves to thinking about digital in terms of platforms or innovation alone.

Six Characteristics of Digital Leadership

- Recognising that digital is not always about scale or flashy projects; it’s about transforming people and ways of working

- Developing digital skills across the organisation, not just within a separate department

- Instead of a digital strategy, integrating digital processes and technologies to serve and shape business and artistic strategies

- Providing leaders with a mandate and budget to test and embed digital technology and agile ways of working

- Starting all programmes and projects with user research and user needs, iterating what you do and how you do it in response to feedback

- Inspiring teams and boards about the benefits of digital transformation with tangible proof of concept, even if the successful experiments are small in scale.

2.2 Agile Software Development

Agile software development is an approach to software development under which requirements and solutions evolve through the collaborative effort of self-organizing and cross-functional teams and their customer(s)/end user(s). It advocates adaptive planning, evolutionary development, early delivery, and continual improvement, and it encourages rapid and flexible response to change.

The term agile (sometimes written Agile) was popularized, in this context, by the Manifesto for Agile Software Development. The values and principles espoused in this manifesto were derived from and underpin a broad range of software development frameworks, including Scrum and Kanban.

There is significant anecdotal evidence that adopting agile practices and values improves the agility of software professionals, teams and organizations; however, some empirical studies have found no scientific evidence.

2.2.1 The Manifesto for Agile Software Development

2.2.1.1 Agile software development values

Based on their combined experience of developing software and helping others do that, the seventeen signatories to the manifesto proclaimed that they value:

- Individuals and Interactions over processes and tools

- Working Software over comprehensive documentation

- Customer Collaboration over contract negotiation

- Responding to Change over following a plan

2.2.1.2 Agile software development principles

The Manifesto for Agile Software Development is based on twelve principles:

- Customer satisfaction by early and continuous delivery of valuable software.

- Welcome changing requirements, even in late development.

- Deliver working software frequently (weeks rather than months)

- Close, daily cooperation between business people and developers

- Projects are built around motivated individuals, who should be trusted

- Face-to-face conversation is the best form of communication (co-location)

- Working software is the primary measure of progress

- Sustainable development, able to maintain a constant pace

- Continuous attention to technical excellence and good design

- Simplicity—the art of maximizing the amount of work not done—is essential

- Best architectures, requirements, and designs emerge from self-organizing teams

- Regularly, the team reflects on how to become more effective, and adjusts accordingly

2.3 Digital Marketing

Digital marketing is the marketing of products or services using digital technologies, mainly on the Internet, but also including mobile phones, display advertising, and any other digital medium.

Digital marketing's development since the 1990s and 2000s has changed the way brands and businesses use technology for marketing. As digital platforms are increasingly incorporated into marketing plans and everyday life, and as people use digital devices instead of visiting physical shops, digital marketing campaigns are becoming more prevalent and efficient.

Digital marketing methods such as search engine optimization (SEO), search engine marketing (SEM), content marketing, influencer marketing, content automation, campaign marketing, data-driven marketing, e-commerce marketing, social media marketing, social media optimization, e-mail direct marketing, display advertising, e-books, and optical disks and games are becoming more common as technology advances. In fact, digital marketing now extends to non-Internet channels that provide digital media, such as mobile phones (SMS and MMS), callback, and on-hold mobile ring tones. In essence, this extension to non-Internet channels helps to differentiate digital marketing from online marketing, another catch-all term for the marketing methods mentioned above, which strictly occur online.

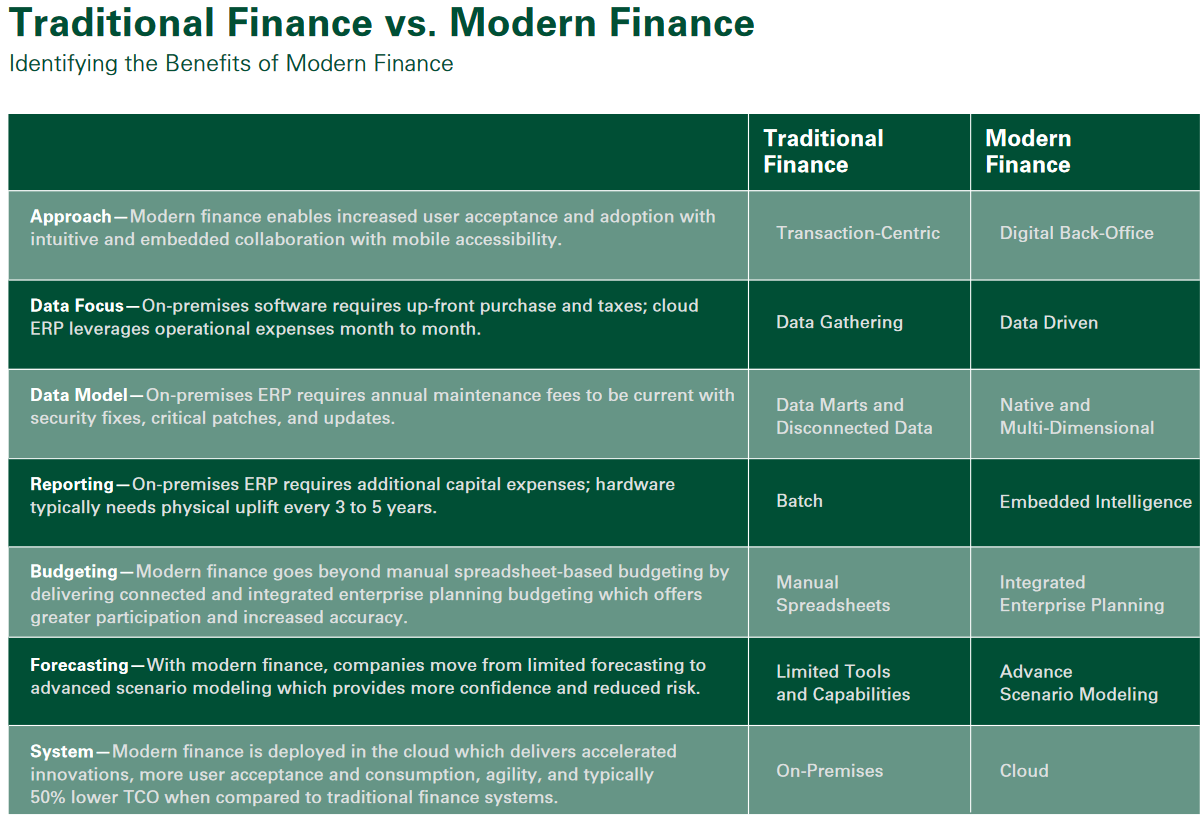

2.4 Modern Finances & Budgeting

Budgeting: modern finance goes beyond manual spreadsheet-based budgeting by delivering connected and integrated enterprise planning and budgeting, which offers greater participation and increased accuracy.

2.4.1 Why Modern Finance is Key to Your Business Success

Regardless of your finance systems challenge—infrastructure, processes or resources—there is a clear path to modern finance.

Finance systems have evolved from foundational bookkeeping concepts developed by Venice’s Luca Pacioli in 1494. Pacioli’s published work defined double entry accounting, and he included concepts and definitions for ledgers, assets, receivables, inventories, liabilities, capital, income and expenses. Perhaps because he was also a religious cleric, he discussed compliance and ethics for finance professionals, including his advice to not go to sleep each day until debits equaled credits.
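
Pacioli's rule that debits must equal credits can be illustrated with a short sketch. The Python example below is only an illustration and is not taken from any finance system; the `JournalEntry` structure, the `is_balanced` helper, the account names and the amounts are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class JournalEntry:
    """One line of a double-entry journal (hypothetical structure)."""
    account: str
    debit: Decimal = Decimal("0")
    credit: Decimal = Decimal("0")


def is_balanced(entries):
    """Return True when total debits equal total credits."""
    total_debits = sum((e.debit for e in entries), Decimal("0"))
    total_credits = sum((e.credit for e in entries), Decimal("0"))
    return total_debits == total_credits


# Illustrative transaction: buying inventory for cash.
purchase = [
    JournalEntry("Inventory", debit=Decimal("500.00")),
    JournalEntry("Cash", credit=Decimal("500.00")),
]

# The same transaction with a posting error on the credit side.
mistyped = [
    JournalEntry("Inventory", debit=Decimal("500.00")),
    JournalEntry("Cash", credit=Decimal("50.00")),
]

print(is_balanced(purchase))  # balanced: debits equal credits
print(is_balanced(mistyped))  # unbalanced: the books cannot be closed yet
```

Amounts use `Decimal` rather than floating point so that currency values compare exactly.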

The principles Pacioli developed over 500 years ago—two years after Christopher Columbus first sailed to the western hemisphere—defined the legacy transactional financial systems developed in the last century. It was a great match that married a 15th century book with 20th century technology.

1. Finance in the 21st Century. Then the landscape changed dramatically and suddenly early in this century. The roughly parallel agility and efficiency curves began to diverge exponentially.

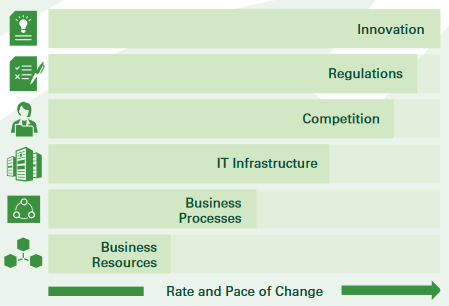

The rate and pace of change in factors such as innovation (particularly in technology), regulations, and competition (especially from those with new and disruptive business models) outstripped the ability of a company’s IT infrastructure, business processes and resources to respond. The gap was small at first, but over the last decade it has increased dramatically. It is now the new normal.

This gap creates its own fundamental problem: it drives company leaders to rely on “gut feel” and instinct to make business decisions rather than using rapid, real-time access to accurate data. It is a terrible outcome as this practice delays decision making and introduces errors. The net result erodes operational and management confidence as delays and errors compound negative choices, negatively impact sales, and hurt employee morale.

2. Enter IFRS 15. Add in complex global issues with changing international norms, like IFRS 15, and the net result is a collection of variables that impact every aspect of your company, especially if you are selling and working across borders.

3. From Finance Challenges to Opportunities. Since finance is a part of every organization, regardless of size, location and industry, this challenge of keeping up with systemic change becomes an opportunity to boldly leverage disruption rather than hunkering down in fear. Businesses that accept this challenge embrace new and leading technologies, standardize on best practices and invest in the next generation of financial software and their employees. Regardless of your finance systems challenge (infrastructure, processes or resources), there is a clear path to modern finance that is well defined and ready to support your company’s success.

2.4.1.1 Top 10 Reasons to Deploy Modern Finance

Consider deploying modern finance when one or more of these conditions is present in your business:

1. Proliferating non-integrated systems are generating more disparate data. New technologies, including mobile devices and Internet of Things objects, are generating data that eventually impacts finance.

2. Data imports and integrations are increasing. With new data types and volumes both increasing, more external imports and new integrations are crafted through APIs, business services, and other vehicles.

3. System maintenance costs are increasing and systems are harder to keep current. Hardware, software licenses and talent get more expensive every year, with few if any noticeable enhancements.

4. Reporting is getting harder and more real-time reports are requested. Despite all the issues, more reports (in real time, nonetheless) are requested.

5. Spreadsheets are proliferating. With increases in data, legacy system issues, and growing reporting demands, spreadsheets gain additional prominence to complete finance analysis and analytics.

6. Rapid and global company growth into new markets and new geographies. Simultaneously, the business decides to enter new venues and naturally expects finance to be ready, no questions asked.

7. New and changing local and international compliance requirements. As more financial transactions span the globe, more entities are promulgating newer and tougher oversight and disclosure.

8. It is time for an IPO (or other major business model evolution). Finance is asked to support rapidly evolving business model plans like going public in an IPO.

9. The population of next generation employees is increasing. Each month more next-gen employees arrive to begin their careers, armed with experiences and education built around a mobile, social world.

10. Close processes are longer and more complex, while desire grows for continuous closes. It is today’s ultimate financial dilemma. Traditional close processes are getting more difficult, but management wants them done faster.

2.4.2 Capabilities of a Modern Finance System

Modern finance requires an integrated and modular set of applications that lets you start anywhere and achieve fast time to value. This approach delivers integrated data utilizing a common schema which leverages extensive historical investments in legacy Oracle and non-Oracle transactional systems. Three key financial capabilities form the foundation of any modern finance system:

1. Management Insight. Enterprise Performance Management solutions provide you with the ability to align strategy with plans and execution, providing management insight needed to guide your business forward with:

- Intuitive web interface with full integrations to popular office productivity tools

- Virtually zero training with included online help and tutorials

- Powerful and scalable modeling engines

- Secure, role-based collaboration for account reconciliation and narrative reporting

- Out-of-the-box support for best practices including rolling forecasts and multi-currency

2. Compliance and Control. Risk and Compliance Management strengthens internal controls by optimizing controls within and outside your financial processes. Capabilities include:

- Centralized and secure repository of risks and controls

- Automated assessments of internal controls compliance

- Enterprise-wide visibility for confident control certifications

- Continuously monitor payables and travel expense transactions for exceptions

- Detect duplicate supplier invoices before payments are made

3. Operational Agility. Financial Management establishes a foundation for local, regional and global operational agility. This provides a digital back office to meet the needs of today’s business by incorporating:

- End-to-end best practice business processes

- Full multi-GAAP, multi-currency and multi-entity support

- Embedded analytics for self-monitoring processes in work areas

- Secure and in-context collaboration and workflow

- Fully integrated out-of-the-box invoice imaging

Besides extensive finance function capabilities, a modern system also requires a rich digital core that is easy to use, embraces social communications platforms, delivers embedded analytics and is mobile accessible across any sized device. It can integrate easily with embedded tools, offers enterprise-grade security and is deployed rapidly for fast time to value.
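
One of the Compliance and Control capabilities listed above, detecting duplicate supplier invoices before payments are made, can be approximated with a simple key comparison. This is a minimal sketch and does not describe how any particular product works; the field names, the normalization rule and the `find_duplicate_invoices` helper are assumptions made for illustration.

```python
from collections import defaultdict
from decimal import Decimal


def normalize(invoice_number):
    """Drop case, spaces and punctuation so 'inv 001' matches 'INV-001'."""
    return "".join(ch for ch in invoice_number.upper() if ch.isalnum())


def find_duplicate_invoices(invoices):
    """Group invoices that share supplier, normalized number and amount.

    Returns a list of groups (lists of invoice ids) with more than one member.
    """
    groups = defaultdict(list)
    for inv in invoices:
        key = (inv["supplier"], normalize(inv["number"]), inv["amount"])
        groups[key].append(inv["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical invoices waiting in the payment queue.
pending = [
    {"id": 1, "supplier": "Acme", "number": "INV-001", "amount": Decimal("120.00")},
    {"id": 2, "supplier": "Acme", "number": "inv 001", "amount": Decimal("120.00")},
    {"id": 3, "supplier": "Acme", "number": "INV-002", "amount": Decimal("75.50")},
]

print(find_duplicate_invoices(pending))  # [[1, 2]]
```

A real control would likely add fuzzy matching on dates and amounts; exact matching on a normalized key is simply the easiest rule to reason about.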

2.4.3 Finance Challenges and Opportunities

There is no doubt about disruption being a significant force across the globe for every business and industry. The popular phrase (you are either a disruptor or being disrupted) also applies to finance organizations, as company leadership requires a move from operational efficiency to operational agility as the new business paradigm. This shift is driven by three challenges common to every business regardless of geography or industry:

1. With Finance in the 21st Century, Data-Driven Decisions Become Elusive. The default tool for many companies is spreadsheets. Too often finance teams find their current systems cannot analyze their data, so a collective decision is made to start a new spreadsheet. As spreadsheets proliferate and variations multiply with no control, the decisions they drive become harder to reach and might introduce error or doubt.

2. Problems with Compliance Complacency. Additional scrutiny is required as new regulations and standards evolve. For example, as IFRS 15 is deployed, software and systems need to be updated, staff skills updated, and processes changed. It is not a simple task. Add in a company’s growth into new markets in new geographies and accurate and meaningful compliance becomes more difficult.

3. Complex Legacy Systems and Environment. Modern activities require modern systems. Legacy on-premises software solutions carry high fixed costs coupled to large implementation and maintenance expenses heavily weighted toward cap-ex. Delayed on-premises projects have a cascading effect that further impacts moving toward data-driven decision making and improving compliance performance.

These challenges highlight why organizations move to the cloud. As legacy on-premises systems are converted to op-ex cloud solutions, finance departments embrace software that is always current, global and innovative. With the right technology for modern finance, both direct and indirect costs are reduced. Working in the cloud unleashes collaboration, which in turn delivers more business value.

2.4.4 Building a Modern Finance Operation

Now is the time to say goodbye to process-focused finance models. Digital has blown them up. Traditional finance activities (including transaction processing, control and risk management, and reporting, analytics and forecasting) have been redesigned and reconfigured. The cloud elevates finance to the insight engine for your business using three essential elements:

1. Management Insight with Analytics Competency Centers. Analytics “gurus” do more than analyze financials. They assess product, customer, expense and project trends. Employees use self-service to explore data “in the moment” to understand the financial impact of operational events and decisions. No more relying on finance to do it for them. And no more trips to IT begging for custom reports.

2. Compliance and Control with Communications and Control Centers. These centers are focused on control, compliance, communications and risk management to consolidate the fundamentals of finance operations: statutory accounting, compliance, tax, treasury and investor relations. They are nimble, responsive and cost-effective, aligning specialized teams around streamlined work processes. For example, one telecom provider is consolidating its fragmented income, property and sales tax organizations. A digital data warehouse automates most routine tax reporting and compliance. This way tax professionals can now focus on optimizing the tax structure of the organization to better support the business strategy.

3. Operational Agility Leveraging Integrated Business Services. These teams deliver complete services to employees, customers and suppliers across functions. They bundle accounting and transaction processing typically performed by finance with tasks from other business areas. Global consulting firm Accenture estimates that by 2020 more than 80 percent of traditional finance services will be delivered by cross-functional teams working within integrated business services teams.

2.4.5 Example - Deploying Modern Finance with Oracle:

Together with Oracle’s other modern applications, finance is delivered in a single cloud that is enterprise-grade and ready to grow your business today. Oracle’s modern finance solution is built on a robust union of platform and applications that is fully integrated with a plethora of solutions covering supply chain management, human resources, sales, marketing and customer activities.

These platform and application characteristics are:

1. Modern-Standards Delivered with Modern Best Practice

Oracle leverages a growing library of 183 published Modern Best Practice processes delivered through secure and highly scalable Oracle-owned and operated cloud platforms. With business applications that are fully integrated and connected, organizations quickly get to work with personalized—never customized—modern and up-to-date software emboldened with revolutionary reporting (including embedded analytics) delivered around intuitive and socially enabled user experiences.

2. Modern Economics Approach

There is a significant economic benefit when you modernize finance in the cloud and move from a cap-ex approach to op-ex. Oracle and its partners offer research and tools to determine the financial advantages of a cloud finance project and services.

- Cloud Solutions Deliver Savings

- Cloud ROI Calculator

- Finance Self-Assessment Tool

- Cloud Marketplace with Partner Solutions

3. Modern Business Applications

For your move to modern finance, Oracle delivers a comprehensive collection of cloud applications that leverage integrated ERP and EPM solutions to deliver real-time answers to your business questions.

- Oracle Enterprise Performance Management (EPM) Cloud

- Oracle Financials Cloud

- Oracle Revenue Management Cloud

- Oracle Risk Management Cloud

- Oracle Accounting Hub Cloud

2.5 Legal Tech

Legal technology, also known as Legal Tech, refers to the use of technology and software to provide legal services. Legal Tech companies are generally startups founded with the purpose of disrupting the traditionally conservative legal market.

Legal technology traditionally referred to the application of technology and software to help law firms with practice management, document storage, billing, accounting and electronic discovery. Since 2011, Legal Tech has evolved to be associated more with technology startups disrupting the practice of law by giving people access to online software that reduces or in some cases eliminates the need to consult a lawyer, or by connecting people with lawyers more efficiently through online marketplaces and lawyer-matching websites.

The legal industry is widely seen to be conservative and traditional, with Law Technology Today noting that "in 50 years, the customer experience at most law firms has barely changed". Reasons for this include the fact law firms face weaker cost-cutting incentives than other professions (since they pass disbursements directly to their client) and are seen to be risk averse (as a minor technological error could have significant financial consequences for a client).

However, the growth in businesses hiring in-house counsel and their increasing sophistication, together with the development of email, has led to clients placing increasing cost and time pressure on their lawyers. In addition, there are increasing incentives for lawyers to become technologically competent: the American Bar Association voted in August 2012 to amend the Model Rules of Professional Conduct to require lawyers to keep abreast of "the benefits and risks associated with relevant technology", and the saturation of the market is leading many lawyers to look for cutting-edge ways to compete. The exponential growth in the volume of documents (mostly email) that must be reviewed for litigation cases has greatly accelerated the adoption of technology used in eDiscovery, with elements of machine learning and artificial intelligence being incorporated and cloud-based services being adopted by law firms.

Investment in Legal Tech is concentrated in the United States, where more than $254 million was invested in 2014.

Stanford Law School has started CodeX, the Center for Legal Informatics, an interdisciplinary research center, which also incubates companies started by law students and computer scientists. Some companies that have come out of the program include Lex Machina and Legal.io.

2.5.1 Legal Tech Key Areas

Traditional areas of Legal Tech include:

- Accounting

- Billing

- Document automation

- Document storage

- Electronic discovery

- Legal research

- Practice management

More recent areas of growth in Legal Tech focus on:

- Providing tools or a marketplace to connect clients with lawyers

- Providing tools for consumers and businesses to complete legal matters by themselves, obviating the need for a lawyer

- Data and contract analytics

- Use of legally binding digital signatures, which help verify the digital identity of each signer, maintain the chain of custody for the documents and can provide audit trails

- Automation of legal writing or other substantive aspects of legal practice

- Platforms for succession planning, i.e. will writing, via online applications

- Providing tools to assist with immigration document preparation in lieu of hiring a lawyer.
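
The audit-trail idea mentioned in the list above can be sketched as a hash chain, in which each recorded event includes the hash of the previous one, so that later tampering becomes detectable. This is an illustrative assumption about how such a trail might be built, not a description of any Legal Tech product. It also does not verify who the signer is: real digital signatures rely on public-key cryptography, which is outside this sketch. The function names, the e-mail addresses and the event wording are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first event


def _digest(signer, action, previous_hash):
    """Hash the event fields together with the previous entry's hash."""
    body = {"signer": signer, "action": action, "previous_hash": previous_hash}
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_event(trail, signer, action):
    """Append an event that is chained to the last entry in the trail."""
    previous_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    trail.append({
        "signer": signer,
        "action": action,
        "previous_hash": previous_hash,
        "hash": _digest(signer, action, previous_hash),
    })


def verify_trail(trail):
    """Return False if any entry was altered, removed or reordered."""
    previous_hash = GENESIS_HASH
    for entry in trail:
        expected = _digest(entry["signer"], entry["action"], entry["previous_hash"])
        if entry["previous_hash"] != previous_hash or entry["hash"] != expected:
            return False
        previous_hash = entry["hash"]
    return True


trail = []
append_event(trail, "alice@example.com", "viewed contract")
append_event(trail, "alice@example.com", "signed contract")
append_event(trail, "bob@example.com", "countersigned contract")
print(verify_trail(trail))  # the untouched trail verifies

# Rewriting an earlier event breaks the chain.
trail[1]["action"] = "declined contract"
print(verify_trail(trail))  # the altered trail no longer verifies
```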

2.5.2 Notable Legal Tech companies

Russia

Legal research:

- Consultant Plus

- Garant

United Kingdom

- Lexoo

- Practical Law Company

United States

Seed round:

- Legal.io

- LegalEase

Series A and beyond:

- Lex Machina

- Ravel Law

- Rocket Lawyer

- UpCounsel

- Wevorce

Other legal technology companies:

- Bloomberg Law

- LegalZoom

- LexisNexis

- Recommind

- TransPerfect

- Westlaw

2.6 Disruptive Technologies

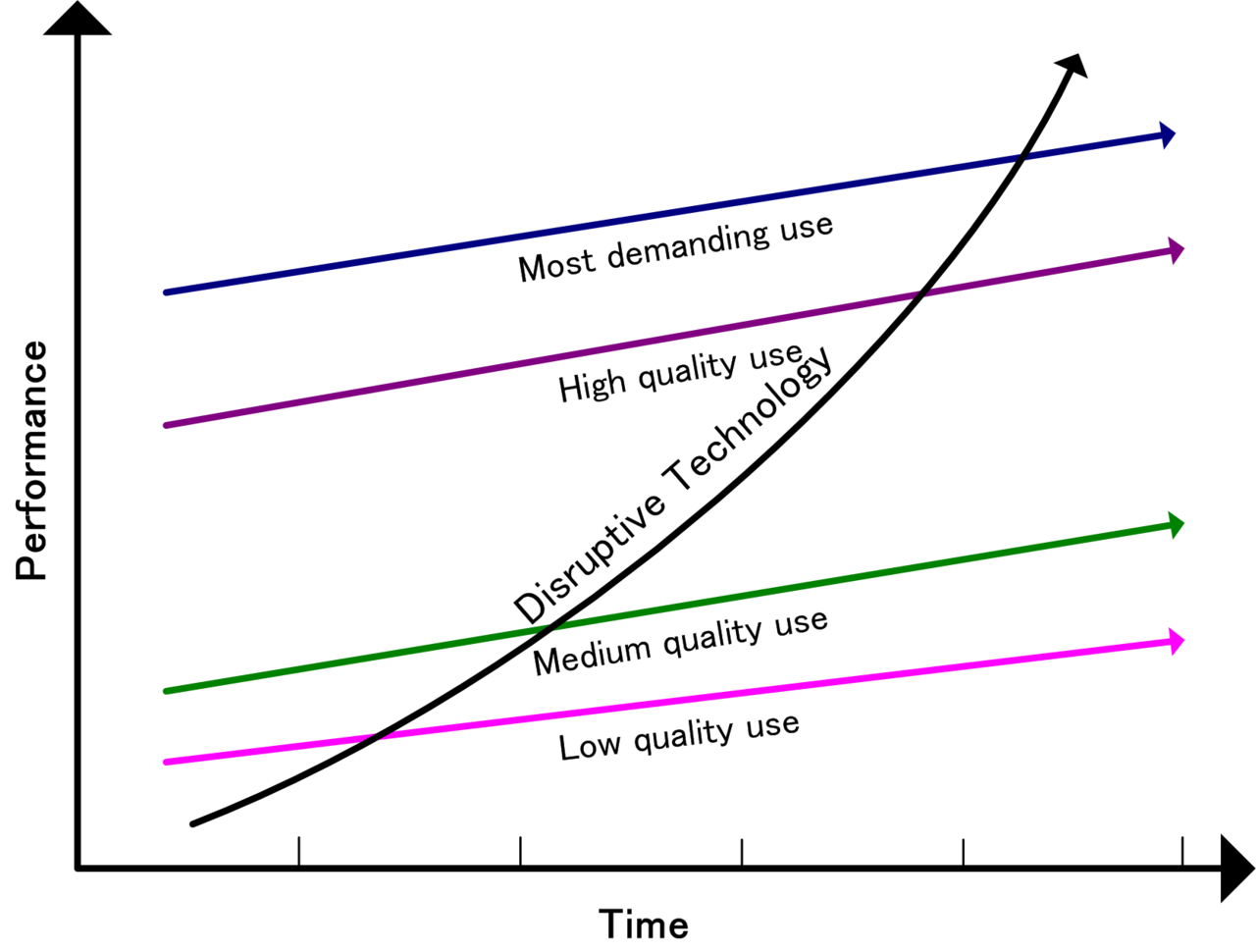

In business theory, a disruptive innovation is an innovation that creates a new market and value network and eventually disrupts an existing market and value network, displacing established market-leading firms, products, and alliances. The term was defined and first analyzed by the American scholar Clayton M. Christensen and his collaborators beginning in 1995, and has been called the most influential business idea of the early 21st century.

Not all innovations are disruptive, even if they are revolutionary. For example, the first automobiles in the late 19th century were not a disruptive innovation, because early automobiles were expensive luxury items that did not disrupt the market for horse-drawn vehicles. The market for transportation essentially remained intact until the debut of the lower-priced Ford Model T in 1908.[5] The mass-produced automobile was a disruptive innovation, because it changed the transportation market, whereas the first thirty years of automobiles did not.

Disruptive innovations tend to be produced by outsiders and entrepreneurs in startups, rather than existing market-leading companies. The business environment of market leaders does not allow them to pursue disruptive innovations when they first arise, because they are not profitable enough at first and because their development can take scarce resources away from sustaining innovations (which are needed to compete against current competition). A disruptive innovation can take longer to develop than a conventional one, and the risk associated with it is higher than with more incremental or evolutionary forms of innovation, but once it is deployed in the market, it achieves much faster penetration and a higher degree of impact on the established markets.

Beyond business and economics, disruptive innovations can also be considered to disrupt complex systems, including economic and business-related aspects.

2.6.1 History of Disruptive Technologies

The term disruptive technologies was coined by Clayton M. Christensen and introduced in his 1995 article Disruptive Technologies: Catching the Wave, which he co-wrote with Joseph Bower. The article is aimed at management executives who make the funding or purchasing decisions in companies, rather than the research community. He described the term further in his book The Innovator's Dilemma, which explored the cases of the disk drive industry (which, with its rapid generational change, is to the study of business what fruit flies are to the study of genetics, as Christensen was advised in the 1990s[11]) and the excavating equipment industry (where hydraulic actuation slowly displaced cable-actuated movement).

In his sequel with Michael E. Raynor, The Innovator's Solution, Christensen replaced the term disruptive technology with disruptive innovation because he recognized that few technologies are intrinsically disruptive or sustaining in character; rather, it is the business model that the technology enables that creates the disruptive impact. Christensen's evolution from a technological focus to a business-modelling focus is central to understanding the evolution of business at the market or industry level. Christensen and Mark W. Johnson, who cofounded the management consulting firm Innosight, described the dynamics of "business model innovation" in the 2008 Harvard Business Review article "Reinventing Your Business Model".

The concept of disruptive technology continues a long tradition of identifying radical technical change in the study of innovation by economists, and the development of tools for its management at a firm or policy level.

The term “disruptive innovation” is misleading when it is used to refer to a product or service at one fixed point, rather than to the evolution of that product or service over time.

In the late 1990s, the automotive sector began to embrace a perspective of "constructive disruptive technology" by working with the consultant David E. O'Ryan, whereby the use of current off-the-shelf technology was integrated with newer innovation to create what he called "an unfair advantage". The process or technology change as a whole had to be "constructive" in improving the current method of manufacturing, yet disruptively impact the whole of the business case model, resulting in a significant reduction of waste, energy, materials, labor, or legacy costs to the user.

In keeping with the insight that what matters economically is the business model, not the technological sophistication itself, Christensen's theory explains why many disruptive innovations are not "advanced technologies", which a default hypothesis would lead one to expect. Rather, they are often novel combinations of existing off-the-shelf components, applied cleverly to a small, fledgling value network.

The online news site TechRepublic has proposed an end to using the term and similar related terms, suggesting that, as of 2014, it is overused jargon.

2.6.2 Theory of Disruptive Technologies

The current theoretical understanding of disruptive innovation is different from what might be expected by default, an idea that Clayton M. Christensen called the "technology mudslide hypothesis". This is the simplistic idea that an established firm fails because it doesn't "keep up technologically" with other firms. In this hypothesis, firms are like climbers scrambling upward on crumbling footing, where it takes constant upward-climbing effort just to stay still, and any break from the effort (such as complacency born of profitability) causes a rapid downhill slide. Christensen and colleagues have shown that this simplistic hypothesis is wrong; it doesn't model reality. What they have shown is that good firms are usually aware of the innovations, but their business environment does not allow them to pursue them when they first arise, because they are not profitable enough at first and because their development can take scarce resources away from that of sustaining innovations (which are needed to compete against current competition). In Christensen's terms, a firm's existing value networks place insufficient value on the disruptive innovation to allow its pursuit by that firm. Meanwhile, start-up firms inhabit different value networks, at least until the day that their disruptive innovation is able to invade the older value network. At that time, the established firm in that network can at best only fend off the market share attack with a me-too entry, for which survival (not thriving) is the only reward.

Christensen defines a disruptive innovation as a product or service designed for a new set of customers.

Generally, disruptive innovations were technologically straightforward, consisting of off-the-shelf components put together in a product architecture that was often simpler than prior approaches. They offered less of what customers in established markets wanted and so could rarely be initially employed there. They offered a different package of attributes valued only in emerging markets remote from, and unimportant to, the mainstream.

Christensen argues that disruptive innovations can hurt successful, well-managed companies that are responsive to their customers and have excellent research and development. These companies tend to ignore the markets most susceptible to disruptive innovations, because the markets have very tight profit margins and are too small to provide a good growth rate to an established (sizable) firm.[16] Thus, disruptive technology provides an example of an instance when the common business-world advice to "focus on the customer" (or "stay close to the customer", or "listen to the customer") can be strategically counterproductive.

While Christensen argued that disruptive innovations can hurt successful, well-managed companies, O'Ryan countered that "constructive" integration of existing, new, and forward-thinking innovation could improve the economic benefits of these same well-managed companies, once decision-making management understood the systemic benefits as a whole.

Christensen distinguishes between "low-end disruption", which targets customers who do not need the full performance valued by customers at the high end of the market, and "new-market disruption", which targets customers who have needs that were previously unserved by existing incumbents.

"Low-end disruption" occurs when the rate at which products improve exceeds the rate at which customers can adopt the new performance. Therefore, at some point the performance of the product overshoots the needs of certain customer segments. At this point, a disruptive technology may enter the market and provide a product that has lower performance than the incumbent but that exceeds the requirements of certain segments, thereby gaining a foothold in the market.

In low-end disruption, the disruptor is focused initially on serving the least profitable customer, who is happy with a good-enough product. This type of customer is not willing to pay a premium for enhancements in product functionality. Once the disruptor has gained a foothold in this customer segment, it seeks to improve its profit margin. To get higher profit margins, the disruptor needs to enter the segment where the customer is willing to pay a little more for higher quality. To ensure this quality in its product, the disruptor needs to innovate. The incumbent will not do much to retain its share in a not-so-profitable segment, and will move up-market and focus on its more attractive customers. After a number of such encounters, the incumbent is squeezed into smaller markets than it was previously serving. Finally, the disruptive technology meets the demands of the most profitable segment and drives the established company out of the market.
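The up-market progression described above can be illustrated with a small numerical sketch. Everything in it is an assumption made for illustration: the segment names, the performance needs, the starting points, and the linear improvement rates are hypothetical values, not data from Christensen's work. The sketch shows only the mechanism: an incumbent whose product already overshoots the needs of some segments, and a disruptor that starts below every segment's needs but reaches them one by one as it improves.

```python
from typing import Dict, List, Optional


def first_year_meeting_need(start: float, yearly_gain: float, need: float,
                            horizon: int = 50) -> Optional[int]:
    """Return the first year in which linearly improving performance meets `need`.

    Returns None if the need is not met within `horizon` years.
    """
    for year in range(horizon + 1):
        if start + yearly_gain * year >= need:
            return year
    return None


# Hypothetical performance needs per customer segment (arbitrary units).
segment_needs: Dict[str, float] = {
    "low_end": 20.0,
    "mainstream": 50.0,
    "high_end": 90.0,
}

# Hypothetical trajectories: the incumbent starts far ahead,
# while the disruptor starts low but improves faster.
incumbent = {"start": 60.0, "yearly_gain": 5.0}
disruptor = {"start": 10.0, "yearly_gain": 8.0}

# Segments whose needs the incumbent already exceeds at year 0 (overshoot).
overshot_segments: List[str] = [
    segment for segment, need in segment_needs.items()
    if incumbent["start"] > need
]

# Year in which the disruptor becomes "good enough" for each segment.
entry_years: Dict[str, Optional[int]] = {
    segment: first_year_meeting_need(disruptor["start"], disruptor["yearly_gain"], need)
    for segment, need in segment_needs.items()
}

if __name__ == "__main__":
    print("Segments overshot by the incumbent:", overshot_segments)
    for segment, year in entry_years.items():
        print(f"Disruptor is good enough for {segment} in year {year}")
```

Under these assumed numbers the disruptor reaches the low-end segment first and the high-end segment last, which mirrors the foothold-then-up-market sequence in the text. Real performance trajectories are rarely linear, so the sketch should be read as a teaching aid rather than a forecasting tool.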

"New market disruption" occurs when a product fits a new or emerging market segment that is not being served by existing incumbents in the industry.

The extrapolation of the theory to all aspects of life has been challenged,[18][19] as has the methodology of relying on selected case studies as the principal form of evidence. Jill Lepore points out that some companies identified by the theory as victims of disruption a decade or more ago, rather than being defunct, remain dominant in their industries today (including Seagate Technology, U.S. Steel, and Bucyrus). Lepore questions whether the theory has been oversold and misapplied, as if it were able to explain everything in every sphere of life, including not just business but education and public institutions.

2.6.2 Disruptive Technologies 101

In 2009, Milan Zeleny described high technology as disruptive technology and raised the question of what is being disrupted. The answer, according to Zeleny, is the support network of high technology.[20] For example, introducing electric cars disrupts the support network for gasoline cars (the network of gas and service stations). Such disruption is fully expected and therefore effectively resisted by support net owners. In the long run, high (disruptive) technology bypasses, upgrades, or replaces the outdated support network. Haxell (2012) questions the concept of a disruptive technology itself, asking how such technologies get named and framed and pointing out that this is a positioned and retrospective act.

Technology, being a form of social relationship, always evolves. No technology remains fixed. Technology starts, develops, persists, mutates, stagnates, and declines, just like living organisms.[23] The evolutionary life cycle occurs in the use and development of any technology. A new high-technology core emerges and challenges existing technology support nets (TSNs), which are thus forced to coevolve with it. New versions of the core are designed and fitted into an increasingly appropriate TSN, with smaller and smaller high-technology effects. High technology becomes regular technology, with more efficient versions fitting the same support net. Finally, even the efficiency gains diminish, emphasis shifts to product tertiary attributes (appearance, style), and technology becomes TSN-preserving appropriate technology. This technological equilibrium state becomes established and fixated, resisting being interrupted by a technological mutation; then new high technology appears and the cycle is repeated.

Regarding this evolving process of technology, Christensen said:

The technological changes that damage established companies are usually not radically new or difficult from a technological point of view. They do, however, have two important characteristics: First, they typically present a different package of performance attributes—ones that, at least at the outset, are not valued by existing customers. Second, the performance attributes that existing customers do value improve at such a rapid rate that the new technology can later invade those established markets.

The World Bank's 2019 World Development Report on The Changing Nature of Work[25] examines how technology shapes the relative demand for certain skills in labor markets and expands the reach of firms. Robotics and digital technologies, for example, enable firms to automate, replacing labor with machines to become more efficient, and to innovate, expanding the number of tasks and products. Joseph Bower explained the process of how disruptive technology, through its requisite support net, dramatically transforms a certain industry.

When the technology that has the potential for revolutionizing an industry emerges, established companies typically see it as unattractive: it’s not something their mainstream customers want, and its projected profit margins aren’t sufficient to cover big-company cost structure. As a result, the new technology tends to get ignored in favor of what’s currently popular with the best customers. But then another company steps in to bring the innovation to a new market. Once the disruptive technology becomes established there, smaller-scale innovations rapidly raise the technology’s performance on attributes that mainstream customers value.

For example, the automobile was high technology with respect to the horse carriage; however, it evolved into technology and finally into appropriate technology with a stable, unchanging TSN. The main high-technology advance in the offing is some form of electric car, whether the energy source is the sun, hydrogen, water, air pressure, or a traditional charging outlet. Electric cars preceded the gasoline automobile by many decades and are now returning to replace the traditional gasoline automobile. The printing press was a development that changed the way that information was stored, transmitted, and replicated. This empowered authors, but it also promoted censorship and information overload in writing technology.

Milan Zeleny described the above phenomenon. He also wrote that:

Implementing high technology is often resisted. This resistance is well understood on the part of active participants in the requisite TSN. The electric car will be resisted by gas-station operators in the same way automated teller machines (ATMs) were resisted by bank tellers and automobiles by horsewhip makers. Technology does not qualitatively restructure the TSN and therefore will not be resisted and never has been resisted. Middle management resists business process reengineering because BPR represents a direct assault on the support net (coordinative hierarchy) they thrive on. Teamwork and multi-functionality are resisted by those whose TSN provides the comfort of narrow specialization and command-driven work.

Social media could be considered a disruptive innovation within sports, specifically in the way sports news circulates today compared with the pre-internet era, when it was carried mainly by television, radio, and newspapers. Social media has created a new market for sports that did not exist before, in the sense that players and fans have instant access to sports-related information.

2.6.3 High-technology Effects of Disruptive Technologies

High technology is a technology core that changes the very architecture (structure and organization) of the components of the technology support net. High technology therefore transforms the qualitative nature of the TSN's tasks and their relations, as well as their requisite physical, energy, and information flows. It also affects the skills required, the roles played, and the styles of management and coordination—the organizational culture itself.

This kind of technology core is different from a regular technology core, which preserves the qualitative nature of flows and the structure of the support net and only allows users to perform the same tasks in the same way, but faster, more reliably, in larger quantities, or more efficiently. It is also different from an appropriate technology core, which preserves the TSN itself with the purpose of technology implementation and allows users to do the same thing in the same way at comparable levels of efficiency, instead of improving the efficiency of performance.

As for the difference between high technology and low technology, Milan Zeleny once said:

The effects of high technology always break the direct comparability by changing the system itself, therefore requiring new measures and new assessments of its productivity. High technology cannot be compared and evaluated with the existing technology purely on the basis of cost, net present value or return on investment. Only within an unchanging and relatively stable TSN would such direct financial comparability be meaningful. For example, you can directly compare a manual typewriter with an electric typewriter, but not a typewriter with a word processor. Therein lies the management challenge of high technology.

However, not all modern technologies are high technologies. They have to be used as such, function as such, and be embedded in their requisite TSNs. They have to empower the individual because only through the individual can they empower knowledge. Not all information technologies have integrative effects. Some information systems are still designed to improve the traditional hierarchy of command and thus preserve and entrench the existing TSN. The administrative model of management, for instance, further aggravates the division of task and labor, further specializes knowledge, separates management from workers, and concentrates information and knowledge in centers.

As knowledge surpasses capital, labor, and raw materials as the dominant economic resource, technologies are also starting to reflect this shift. Technologies are rapidly shifting from centralized hierarchies to distributed networks. Nowadays knowledge does not reside in a super-mind, super-book, or super-database, but in a complex relational pattern of networks brought forth to coordinate human action.

2.6.4 Example of disruption

In the practical world, the popularization of personal computers illustrates how knowledge contributes to the ongoing technology innovation. The original centralized concept (one computer, many persons) is a knowledge-defying idea of the prehistory of computing, and its inadequacies and failures have become clearly apparent. The era of personal computing brought powerful computers "on every desk" (one person, one computer). This short transitional period was necessary for getting used to the new computing environment, but was inadequate from the vantage point of producing knowledge. Adequate knowledge creation and management come mainly from networking and distributed computing (one person, many computers). Each person's computer must form an access point to the entire computing landscape or ecology through the Internet of other computers, databases, and mainframes, as well as production, distribution, and retailing facilities, and the like. For the first time, technology empowers individuals rather than external hierarchies. It transfers influence and power where it optimally belongs: at the loci of the useful knowledge. Even though hierarchies and bureaucracies do not innovate, free and empowered individuals do; knowledge, innovation, spontaneity, and self-reliance are becoming increasingly valued and promoted.

Amazon Alexa, Uber, and Airbnb are other examples of disruption.

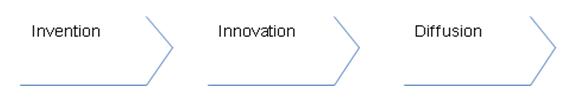

2.7 Innovation

Innovation in its modern meaning is "a new idea, creative thoughts, new imaginations in form of device or method". Innovation is often also viewed as the application of better solutions that meet new requirements, unarticulated needs, or existing market needs. Such innovation takes place through the provision of more effective products, processes, services, technologies, or business models that are made available to markets, governments and society. An innovation is something original and more effective and, as a consequence, new, that "breaks into" the market or society. Innovation is related to, but not the same as, invention: innovation is more apt to involve the practical implementation of an invention (i.e., a new or improved ability) to make a meaningful impact in the market or society, and not all innovations require an invention. Innovation often manifests itself via the engineering process, when the problem being solved is of a technical or scientific nature. The opposite of innovation is exnovation.

While a novel device is often described as an innovation, in economics, management science, and other fields of practice and analysis, innovation is generally considered to be the result of a process that brings together various novel ideas in such a way that they affect society. In industrial economics, innovations are created and found empirically from services to meet growing consumer demand.

Innovation also has an older historical meaning which is quite different. From the 1400s through the 1600s, prior to early American settlement, the concept of "innovation" was pejorative. It was an early modern synonym for rebellion, revolt and heresy.

2.7.1 Definition of Innovation

A 2014 survey of literature on innovation found over 40 definitions. In an industrial survey of how the software industry defined innovation, the following definition given by Crossan and Apaydin, which builds on the Organisation for Economic Co-operation and Development (OECD) manual's definition, was considered to be the most complete:

Innovation is production or adoption, assimilation, and exploitation of a value-added novelty in economic and social spheres; renewal and enlargement of products, services, and markets; development of new methods of production; and the establishment of new management systems. It is both a process and an outcome.

Kanter holds that innovation includes original invention and creative use, and defines innovation as the generation, admission and realization of new ideas, products, services and processes.

The two main dimensions of innovation are degree of novelty (patent) (i.e., whether an innovation is new to the firm, new to the market, new to the industry, or new to the world) and kind of innovation (i.e., whether it is process or product-service system innovation). In recent organizational scholarship, researchers of workplaces have also distinguished innovation from creativity by providing an updated definition of these two related but distinct constructs:

Workplace creativity concerns the cognitive and behavioral processes applied when attempting to generate novel ideas. Workplace innovation concerns the processes applied when attempting to implement new ideas. Specifically, innovation involves some combination of problem/opportunity identification, the introduction, adoption or modification of new ideas germane to organizational needs, the promotion of these ideas, and the practical implementation of these ideas.

2.7.2 Inter-disciplinary Views of Innovation

2.7.2.1 Business and economics

In business and in economics, innovation can become a catalyst for growth. With rapid advancements in transportation and communications over the past few decades, the old-world concepts of factor endowments and comparative advantage, which focused on an area's unique inputs, are outmoded for today's global economy. Economist Joseph Schumpeter (1883–1950), who contributed greatly to the study of innovation economics, argued that industries must incessantly revolutionize the economic structure from within, that is, innovate with better or more effective processes and products, as well as market distribution, such as the connection from the craft shop to the factory. He famously asserted that "creative destruction is the essential fact about capitalism".[16] Entrepreneurs continuously look for better ways to satisfy their consumer base with improved quality, durability, service and price, which come to fruition in innovation with advanced technologies and organizational strategies.

A prime example of innovation involved the explosive boom of Silicon Valley startups out of the Stanford Industrial Park. In 1957, dissatisfied employees of Shockley Semiconductor, the company of Nobel laureate and co-inventor of the transistor William Shockley, left to form an independent firm, Fairchild Semiconductor. After several years, Fairchild developed into a formidable presence in the sector. Eventually, these founders left to start their own companies based on their own, unique, latest ideas, and then leading employees started their own firms. Over the next 20 years, this snowball process launched the momentous startup-company explosion of information-technology firms. Essentially, Silicon Valley began as 65 new enterprises born out of Shockley's eight former employees. Since then, hubs of innovation have sprung up globally with similar metonyms, including Silicon Alley encompassing New York City.

Another example involves business incubators – a phenomenon nurtured by governments around the world, close to knowledge clusters (mostly research-based) like universities or other Government Excellence Centres – which aim primarily to channel generated knowledge to applied innovation outcomes in order to stimulate regional or national economic growth.

2.7.2.2 Organizations

In the organizational context, innovation may be linked to positive changes in efficiency, productivity, quality, competitiveness, and market share. However, recent research findings highlight the complementary role of organizational culture in enabling organizations to translate innovative activity into tangible performance improvements. Organizations can also improve profits and performance by providing work groups with opportunities and resources to innovate, in addition to employees' core job tasks. Peter Drucker wrote:

Innovation is the specific function of entrepreneurship, whether in an existing business, a public service institution, or a new venture started by a lone individual in the family kitchen. It is the means by which the entrepreneur either creates new wealth-producing resources or endows existing resources with enhanced potential for creating wealth. –Drucker

According to Clayton Christensen, disruptive innovation is the key to future success in business. The organization requires a proper structure in order to retain competitive advantage. It is necessary to create and nurture an environment of innovation. Executives and managers need to break away from traditional ways of thinking and use change to their advantage. It is a time of risk but even greater opportunity. The world of work is changing with the increase in the use of technology and both companies and businesses are becoming increasingly competitive. Companies will have to downsize or reengineer their operations to remain competitive. This will affect employment as businesses will be forced to reduce the number of people employed while accomplishing the same amount of work if not more.

While disruptive innovation will typically "attack a traditional business model with a lower-cost solution and overtake incumbent firms quickly,"[26] foundational innovation is slower, and typically has the potential to create new foundations for global technology systems over the longer term. Foundational innovation tends to transform business operating models as entirely new business models emerge over many years, with gradual and steady adoption of the innovation leading to waves of technological and institutional change that gain momentum more slowly. The packet-switched communication protocol TCP/IP is a foundational technology: it was originally introduced in 1972 to support a single use case, electronic communication (email) for the United States Department of Defense, and gained widespread adoption only in the mid-1990s with the advent of the World Wide Web.

All organizations can innovate, including, for example, hospitals, universities, and local governments. For instance, former Mayor Martin O’Malley pushed the City of Baltimore to use CitiStat, a performance-measurement data and management system that allows city officials to maintain statistics on areas ranging from crime trends to the condition of potholes. This system aids in better evaluation of policies and procedures, with accountability and efficiency in terms of time and money. In its first year, CitiStat saved the city $13.2 million. Even mass transit systems have innovated, with everything from hybrid bus fleets to real-time tracking at bus stands. In addition, mobile data terminals are increasingly used in vehicles; they serve as communication hubs between vehicles and a control center and automatically send data on location, passenger counts, engine performance, mileage and other information. This tool helps to deliver and manage transportation systems.

Still other innovative strategies include hospitals digitizing medical information in electronic medical records. Further examples come from the public and nonprofit sectors: the U.S. Department of Housing and Urban Development's HOPE VI initiatives turned severely distressed public housing in urban areas into revitalized, mixed-income environments; the Harlem Children’s Zone used a community-based approach to educate local area children; and the Environmental Protection Agency's brownfield grants facilitate turning over brownfields for environmental protection, green spaces, and community and commercial development.

Hasmath et al. have found that within local government organizations in China, the appetite to innovate may be linked to specific character types. They identify three distinct character types within Chinese local government: an authoritarian bureaucratic type, a primarily older male cadre who are most likely to follow central government command; a consultative governance type that is most open to collaborating with NGOs and others outside of government; and an entrepreneurial type that is both less risk averse and demonstrates high personal efficacy.

2.7.2.3 Sources of Innovation

There are several sources of innovation. It can occur as a result of a focused effort by a range of different agents, by chance, or as a result of a major system failure.

According to Peter F. Drucker, the general sources of innovations are different changes in industry structure, in market structure, in local and global demographics, in human perception, mood and meaning, in the amount of already available scientific knowledge, etc.

In the simplest linear model of innovation, the traditionally recognized source is manufacturer innovation. This is where an agent (person or business) innovates in order to sell the innovation. Specifically, R&D spending is the commonly used measure of input for innovation, in particular in the business sector, where it is named Business Expenditure on R&D (BERD); BERD has grown over the years while R&D investment by the public sector has declined.

Another source of innovation, only now becoming widely recognized, is end-user innovation. This is where an agent (person or company) develops an innovation for their own (personal or in-house) use because existing products do not meet their needs. MIT economist Eric von Hippel has identified end-user innovation as, by far, the most important and critical in his classic book on the subject, "The Sources of Innovation".

The robotics engineer Joseph F. Engelberger asserts that innovations require only three things:

- a recognized need

- competent people with relevant technology

- financial support

However, innovation processes usually involve: identifying customer needs, macro and meso trends, developing competences, and finding financial support.

The Kline chain-linked model of innovation places emphasis on potential market needs as drivers of the innovation process, and describes the complex and often iterative feedback loops between marketing, design, manufacturing, and R&D.

Innovation by businesses is achieved in many ways, with much attention now given to formal research and development (R&D) for "breakthrough innovations". R&D helps spur patents and other scientific innovations that lead to productive growth in such areas as industry, medicine, engineering, and government. Yet innovations can also be developed by less formal on-the-job modifications of practice, through exchange and combination of professional experience, and by many other routes. Investigation of the relationship between the concepts of innovation and technology transfer has revealed overlap. The more radical and revolutionary innovations tend to emerge from R&D, while more incremental innovations may emerge from practice, but there are many exceptions to each of these trends.

Information technology and changing business processes and management style can produce a work climate favorable to innovation. For example, the software tool company Atlassian conducts quarterly "ShipIt Days" in which employees may work on anything related to the company's products. Google employees work on self-directed projects for 20% of their time (known as Innovation Time Off). Both companies cite these bottom-up processes as major sources for new products and features.

An important innovation factor is customers buying products or using services. As a result, organizations may incorporate users in focus groups (user-centred approach), work closely with so-called lead users (lead user approach), or let users adapt their products themselves. The lead user method focuses on idea generation based on leading users to develop breakthrough innovations. U-STIR, a project to innovate Europe’s surface transportation system, employs such workshops. Regarding this user innovation, a great deal of innovation is done by those actually implementing and using technologies and products as part of their normal activities. Sometimes user-innovators become entrepreneurs, selling their product; they may choose to trade their innovation in exchange for other innovations; or their innovations may be adopted by their suppliers. Nowadays, they may also choose to freely reveal their innovations, using methods like open source. In such networks of innovation, the users or communities of users can further develop technologies and reinvent their social meaning.

One technique for innovating a solution to an identified problem is to actually attempt an experiment with many possible solutions. This technique was famously used by Thomas Edison's laboratory to find a version of the incandescent light bulb economically viable for home use, which involved searching through thousands of possible filament designs before settling on carbonized bamboo.

This technique is sometimes used in pharmaceutical drug discovery. Thousands of chemical compounds are subjected to high-throughput screening to see if they have any activity against a target molecule which has been identified as biologically significant to a disease. Promising compounds can then be studied; modified to improve efficacy, reduce side effects, and reduce cost of manufacture; and if successful turned into treatments.

The related technique of A/B testing is often used to help optimize the design of web sites and mobile apps. This is used by major sites such as amazon.com, Facebook, Google, and Netflix. Procter & Gamble uses computer-simulated products and online user panels to conduct larger numbers of experiments to guide the design, packaging, and shelf placement of consumer products. Capital One uses this technique to drive credit card marketing offers.
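As a rough illustration of how an A/B test result might be evaluated, the sketch below compares the conversion rates of two page variants with a two-proportion z-test. This is one common way to analyze such an experiment, not a description of how the companies named above run theirs. The function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical values chosen for the example.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare conversion rates of variants A and B.

    Returns the z statistic (positive when B converts better than A)
    and the two-sided p-value under a normal approximation.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Each variant needs at least one visitor.")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Conversion rates of exactly 0% or 100% cannot be tested.")

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical experiment: 10,000 visitors per variant.
    z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    if p < 0.05:
        print("The difference is unlikely to be due to chance alone.")
    else:
        print("No statistically significant difference detected.")
```

The normal approximation used here is reasonable only when each variant has a large number of visitors and conversions; for small samples an exact test would be a safer choice.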

2.7.2.4 Goals and Failures of Innovation

Programs of organizational innovation are typically tightly linked to organizational goals and objectives, to the business plan, and to market competitive positioning. One driver for innovation programs in corporations is to achieve growth objectives. As Davila et al. (2006) note, "Companies cannot grow through cost reduction and reengineering alone... Innovation is the key element in providing aggressive top-line growth, and for increasing bottom-line results".

One survey across a large number of manufacturing and services organizations found, ranked in decreasing order of popularity, that systematic programs of organizational innovation are most frequently driven by: improved quality, creation of new markets, extension of the product range, reduced labor costs, improved production processes, reduced materials, reduced environmental damage, replacement of products/services, reduced energy consumption, conformance to regulations.

These goals vary between improvements to products, processes and services and dispel a popular myth that innovation deals mainly with new product development. Most of the goals could apply to any organization be it a manufacturing facility, marketing company, hospital or government. Whether innovation goals are successfully achieved or otherwise depends greatly on the environment prevailing in the organization.

Conversely, programs of innovation can fail. The causes of failure have been widely researched and can vary considerably. Some causes are external to the organization and outside its influence or control. Others are internal and ultimately within the control of the organization. Internal causes of failure can be divided into causes associated with the cultural infrastructure and causes associated with the innovation process itself. Common causes of failure within the innovation process in most organizations can be distilled into five types: poor goal definition, poor alignment of actions to goals, poor participation in teams, poor monitoring of results, and poor communication and access to information.

2.7.3 Diffusion of Innovation

Diffusion of innovation research began in 1903 with seminal researcher Gabriel Tarde, who first plotted the S-shaped diffusion curve. Tarde defined the innovation-decision process as a series of steps that include:

- knowledge

- forming an attitude

- a decision to adopt or reject

- implementation and use

- confirmation of the decision

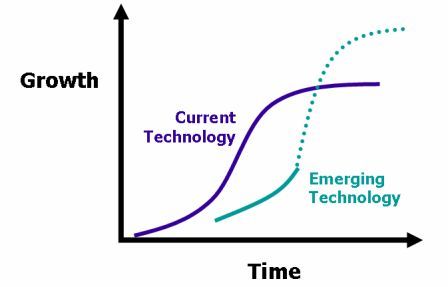

Once innovation occurs, it may spread from the innovator to other individuals and groups. It has been proposed that the lifecycle of innovations can be described using the 's-curve' or diffusion curve. The s-curve maps growth of revenue or productivity against time. In the early stage of a particular innovation, growth is relatively slow as the new product establishes itself. At some point, customer demand picks up and product growth increases more rapidly. New incremental innovations or changes to the product allow growth to continue. Towards the end of its lifecycle, growth slows and may even begin to decline. In the later stages, no amount of new investment in that product will yield a normal rate of return.

The s-curve derives from an assumption that new products are likely to have a "product life", i.e. a start-up phase, a rapid increase in revenue and an eventual decline. In fact, the great majority of innovations never get off the bottom of the curve, and never produce normal returns.

Innovative companies will typically be working on new innovations that will eventually replace older ones. Successive s-curves will come along to replace older ones and continue to drive growth upwards. In the figure above the first curve shows a current technology. The second shows an emerging technology that currently yields lower growth but will eventually overtake current technology and lead to even greater levels of growth. The length of life will depend on many factors.
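The idea of successive s-curves can be illustrated with a short numerical sketch. The text does not prescribe a formula, so the example below assumes, purely for illustration, that each technology follows a logistic function of time. The function names and all parameter values (`ceiling`, `midpoint`, `steepness`) are hypothetical.

```python
import math


def s_curve(t, ceiling, midpoint, steepness):
    """Logistic curve: slow start, rapid growth, then saturation at `ceiling`."""
    return ceiling / (1 + math.exp(-steepness * (t - midpoint)))


def current_tech(t):
    # Hypothetical mature technology: saturates early at a lower level
    return s_curve(t, ceiling=100, midpoint=5, steepness=1.0)


def emerging_tech(t):
    # Hypothetical emerging technology: starts later, reaches a higher level
    return s_curve(t, ceiling=250, midpoint=12, steepness=0.8)


def crossover_year(max_year=30):
    """Return the first year in which the emerging curve exceeds the current one."""
    for year in range(max_year + 1):
        if emerging_tech(year) > current_tech(year):
            return year
    return None


if __name__ == "__main__":
    for year in range(0, 21, 4):
        print(f"year {year:2d}: current = {current_tech(year):6.1f}, "
              f"emerging = {emerging_tech(year):6.1f}")
    print("emerging technology overtakes in year", crossover_year())
```

Under these assumed parameters the emerging technology yields less than the current one for many years before overtaking it, which mirrors the two curves described above.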

2.7.4 Measures of Innovation

Measuring innovation is inherently difficult as it implies commensurability so that comparisons can be made in quantitative terms. Innovation, however, is by definition novelty. Comparisons across products or services are thus often meaningless. Nevertheless, Edison et al., in their review of literature on innovation management, found 232 innovation metrics. They categorized these measures along five dimensions: inputs to the innovation process, output from the innovation process, effect of the innovation output, measures to assess the activities in an innovation process, and availability of factors that facilitate such a process.

Innovation is measured at two different levels: the organizational level and the political level.

2.7.4.1 Organizational level

The measure of innovation at the organizational level relates to individuals, team-level assessments, and private companies from the smallest to the largest. Innovation in organizations can be measured through surveys, workshops, consultants, or internal benchmarking. There is today no established general way to measure organizational innovation. Corporate measurements are generally structured around balanced scorecards which cover several aspects of innovation, such as business measures related to finances, innovation process efficiency, employees' contribution and motivation, as well as benefits for customers. Measured values vary widely between businesses, covering for example new product revenue, spending on R&D, time to market, customer and employee perception and satisfaction, number of patents, and additional sales resulting from past innovations.
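To make a few of these measures concrete, the sketch below derives three simple ratios from raw figures. Since there is no established general way to measure organizational innovation, the choice of ratios, the class and field names, and the sample figures are all hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass


@dataclass
class InnovationInputs:
    """Raw yearly figures for one company (all values hypothetical)."""
    total_revenue: float        # total sales
    new_product_revenue: float  # sales from recently launched products
    rd_spend: float             # spending on research and development
    patents_filed: int          # patents filed during the year


def scorecard(x: InnovationInputs) -> dict:
    """Compute a few illustrative innovation ratios."""
    return {
        # Share of sales that comes from new products
        "new_product_revenue_share": x.new_product_revenue / x.total_revenue,
        # R&D spending relative to sales
        "rd_intensity": x.rd_spend / x.total_revenue,
        # Patents filed per million currency units of R&D spending
        "patents_per_million_rd": x.patents_filed / (x.rd_spend / 1_000_000),
    }


if __name__ == "__main__":
    example = InnovationInputs(
        total_revenue=200_000_000,
        new_product_revenue=50_000_000,
        rd_spend=10_000_000,
        patents_filed=20,
    )
    for name, value in scorecard(example).items():
        print(f"{name}: {value:.3f}")
```

Ratios like these are only meaningful when tracked over time or compared with similar businesses, because measured values vary widely between businesses.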

2.7.4.2 Political Level

At the political level, measures of innovation focus more on a country's or region's competitive advantage through innovation. In this context, organizational capabilities can be evaluated through various evaluation frameworks, such as those of the European Foundation for Quality Management. The OECD Oslo Manual (1992) suggests standard guidelines on measuring technological product and process innovation. Some people consider the Oslo Manual complementary to the Frascati Manual from 1963. The new Oslo Manual from 2018 takes a wider perspective on innovation, and includes marketing and organizational innovation. These standards are used, for example, in the European Community Innovation Surveys.

Innovation has also traditionally been measured by expenditure, for example investment in R&D (Research and Development) as a percentage of GNP (Gross National Product). Whether this is a good measurement of innovation has been widely discussed, and the Oslo Manual has incorporated some of the critique against earlier methods of measuring. The traditional methods of measuring still inform many policy decisions. The EU Lisbon Strategy has set as a goal that average expenditure on R&D should be 3% of GDP.

2.7.4.3 Indicators of Innovations

Many scholars claim that there is a great bias towards the "science and technology mode" (S&T-mode or STI-mode), while the "learning by doing, using and interacting mode" (DUI-mode) is ignored, and measurements and research about it are rarely done. For example, an institution may be high tech with the latest equipment, but lack the crucial doing, using and interacting tasks that are important for innovation.

A common industry view (unsupported by empirical evidence) is that comparative cost-effectiveness research is a form of price control which reduces returns to industry, and thus limits R&D expenditure, stifles future innovation and compromises new products' access to markets.[55] Some academics claim cost-effectiveness research is a valuable value-based measure of innovation which accords "truly significant" therapeutic advances (i.e. those providing "health gain") higher prices than free market mechanisms. Such value-based pricing has been viewed as a means of indicating to industry the type of innovation that should be rewarded from the public purse.

An Australian academic has argued that national comparative cost-effectiveness analysis systems should be viewed as measuring "health innovation", an evidence-based policy concept for valuing innovation that is distinct from valuing it through competitive markets (a method which requires strong anti-trust laws to be effective). The argument rests on the fact that both methods of assessing pharmaceutical innovations are mentioned in annex 2C.1 of the Australia-United States Free Trade Agreement.

2.7.4.4 Indices of Innovation

Several indices attempt to measure innovation and rank entities based on these measures, such as:

- Bloomberg Innovation Index

- "Bogota Manual", similar to the Oslo Manual, is focused on Latin America and the Caribbean countries

- "Creative Class" developed by Richard Florida

- EIU Innovation Ranking

- Global Competitiveness Report

- Global Innovation Index (GII), by INSEAD

- Information Technology and Innovation Foundation (ITIF) Index

- Innovation 360 – From the World Bank. Aggregates innovation indicators (and more) from a number of different public sources

- Innovation Capacity Index (ICI) published by a large number of international professors working in a collaborative fashion. The top scorers of ICI 2009–2010 were: 1. Sweden 82.2; 2. Finland 77.8; and 3. United States 77.5

- Innovation Index, developed by the Indiana Business Research Center, to measure innovation capacity at the county or regional level in the United States

- Innovation Union Scoreboard

- innovationsindikator for Germany, developed by the Federation of German Industries (Bundesverband der Deutschen Industrie) in 2005

- INSEAD Innovation Efficacy Index

- International Innovation Index, produced jointly by The Boston Consulting Group, the National Association of Manufacturers (NAM) and its nonpartisan research affiliate The Manufacturing Institute, is a worldwide index measuring the level of innovation in a country; NAM describes it as the "largest and most comprehensive global index of its kind"

- Management Innovation Index – Model for Managing Intangibility of Organizational Creativity: Management Innovation Index

- NYCEDC Innovation Index, by the New York City Economic Development Corporation, tracks New York City's "transformation into a center for high-tech innovation. It measures innovation in the City's growing science and technology industries and is designed to capture the effect of innovation on the City's economy"

- OECD Oslo Manual is focused on North America, Europe, and other rich economies

- State Technology and Science Index, developed by the Milken Institute, is a U.S.-wide benchmark to measure the science and technology capabilities that furnish high paying jobs based around key components

- World Competitiveness Scoreboard

2.7.4.5 Rankings of Innovation

Many research studies try to rank countries based on measures of innovation. Common areas of focus include high-tech companies, manufacturing, patents, post-secondary education, research and development, and research personnel. The left ranking of the top 10 countries below is based on the 2016 Bloomberg Innovation Index. However, studies may vary widely; for example, the Global Innovation Index 2016 ranks Switzerland as number one, while countries like South Korea and Japan do not even make the top ten.

2.7.4.6 Future of Innovation

In 2005 Jonathan Huebner, a physicist working at the Pentagon's Naval Air Warfare Center, argued on the basis of both U.S. patents and world technological breakthroughs, per capita, that the rate of human technological innovation peaked in 1873 and has been slowing ever since. In his article, he asked "Will the level of technology reach a maximum and then decline as in the Dark Ages?" In later comments to New Scientist magazine, Huebner clarified that while he believed that we will reach a rate of innovation in 2024 equivalent to that of the Dark Ages, he was not predicting the reoccurrence of the Dark Ages themselves.

John Smart criticized the claim and asserted that technological singularity researcher Ray Kurzweil and others had shown a "clear trend of acceleration, not deceleration" when it came to innovations. The foundation replied to Huebner in the journal in which his article was published, citing Second Life and eHarmony as proof of accelerating innovation, and Huebner replied in turn. However, Huebner's findings were confirmed in 2010 with U.S. Patent Office data and in a 2012 paper.

2.7.4.7 Innovation and Development

The theme of innovation as a tool for disrupting patterns of poverty has gained momentum since the mid-2000s among major international development actors, as seen in the work of DFID, the Gates Foundation's use of the Grand Challenge funding model, and USAID's Global Development Lab. Networks have been established to support innovation in development, such as D-Lab at MIT. Investment funds have been established to identify and catalyze innovations in developing countries, such as DFID's Global Innovation Fund, the Human Development Innovation Fund, and (in partnership with USAID) the Global Development Innovation Ventures.

2.7.5 Government Policies